The biohack.me forums were originally run on Vanilla and ran from January 2011 to July 2024. They are preserved here as a read-only archive. If you had an account on the forums and are in the archive and wish to have either your posts anonymized or removed entirely, email us and let us know.

While we are no longer running Vanilla, Patreon badges are still being awarded, and shoutout forum posts are being created, because this is done directly in the database via an automated task.

EEG help

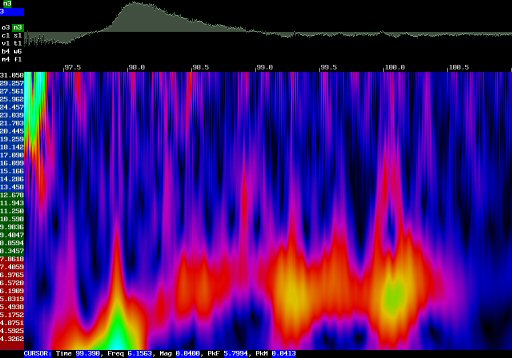

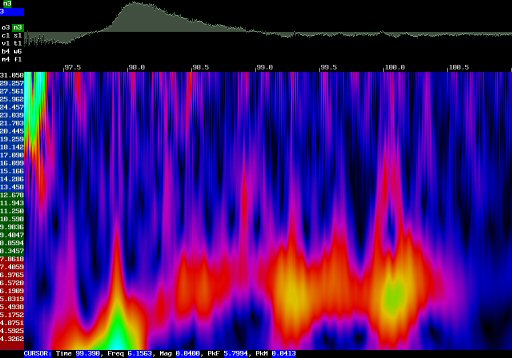

I was being serious when I was talking about that EEG guitar. However, I have a question about EEGs: how do I read this, and what does it mean?

Here is a link to the site:

http://users.dcc.uchile.cl/~peortega/ae/

Sincerely,

John Doe

Comments

Displaying all 24 comments

-

Well that's clearly an alpha rhythm. It's pretty slow though.

-

Are you writing your own program to convert the data to musical notes in a synthesizer, or using a preexisting VST/standalone plugin?

-

@Cassox How can you tell that it is an alpha rhythm, and how can you tell that it is slow? Where would be a good place to read about brain waves? Thanks for your explanation!

Sincerely,

John Doe

@MTS I am designing a guitar whose strings will be played by robot arms inside the guitar. I am having to design, make, and problem-solve most of it as I go; honestly, I am writing the book as I go. For example, I already know an EEG and the mechanism will be too slow for this purpose. My plan to counter that is to stay a little ahead of the tempo of the song to account for the delay.

Another example is how to shape chords (please correct me here if I am wrong). There are beta, gamma, and alpha brain waves, and all can be measured together. The spikes in each wave would thereby create a unique "code" that will allow me to shape and play all possible chords and tricks (slides, muting, etc.). From my understanding, that is the latest in EEG technology.

I will soon start a portfolio and, when/if appropriate, a build thread to show my progress. Thank you for any thoughts you may have.

Sincerely,

John Doe
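The plan to be a little ahead of the tempo is simple arithmetic: one beat lasts 60/BPM seconds, so a fixed delay of d milliseconds corresponds to (d/1000)·BPM/60 beats. A minimal sketch, assuming the whole EEG-plus-mechanism delay is a single constant; the 500 ms figure and the function names are hypothetical:

```python
def lead_time_beats(latency_ms: float, bpm: float) -> float:
    """How many beats early a command must be sent to cover a fixed delay."""
    seconds_per_beat = 60.0 / bpm
    return (latency_ms / 1000.0) / seconds_per_beat


def send_time_s(target_beat: float, latency_ms: float, bpm: float) -> float:
    """Seconds from the start of the song at which to send a command so that
    it sounds on target_beat (beats counted from 0), assuming a constant delay."""
    seconds_per_beat = 60.0 / bpm
    return target_beat * seconds_per_beat - latency_ms / 1000.0
```

At 120 BPM a hypothetical 500 ms delay works out to exactly one beat, so `send_time_s(8, 500, 120)` returns 3.5 s for a note meant to land at 4.0 s.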

-

Actually, I'm fucking with you, man. I have no idea what's up with that EEG.

-

OK, intentional delay sounds like a good fix. One thing's for sure: whatever algorithm you create to program the delay will have to adapt to the type of brainwaves being picked up, which would be hard to do, because you would have to keep changing how far ahead you are in the song depending on whether you were emitting slower or faster brainwaves. I'm not sure about everything else, as I'm just a music producer, but I'm excited to see what you come up with!
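One hedged way to make the lead time follow a delay that drifts is to keep re-measuring it and smooth the measurements with an exponential moving average. This sketch assumes you can timestamp both the moment a command is decoded and the moment the note actually sounds; `LatencyTracker` and its smoothing factor are hypothetical choices, not something from the thread:

```python
class LatencyTracker:
    """Running estimate of end-to-end delay, smoothed with an exponential moving average."""

    def __init__(self, initial_ms: float, alpha: float = 0.2):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.estimate_ms = initial_ms
        self.alpha = alpha  # higher alpha reacts faster but jitters more

    def update(self, measured_ms: float) -> float:
        """Blend one new latency measurement into the estimate and return it."""
        self.estimate_ms += self.alpha * (measured_ms - self.estimate_ms)
        return self.estimate_ms

    def lead_beats(self, bpm: float) -> float:
        """How many beats ahead of the song to issue commands right now."""
        return (self.estimate_ms / 1000.0) * bpm / 60.0
```

The smoothing factor is the trade-off: a small `alpha` ignores one-off outliers, a large one follows genuine changes in delay more quickly.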

-

Yeap! Clearly alpha!

Seriously though: I don't have a lot of hands-on experience with EEG, but I know a bit about it. If you want to learn the basics, a good place to start, as always, is Wikipedia: https://en.wikipedia.org/wiki/Electroencephalography#Normal_activity

I took a BCI summer course at Radboud in the Netherlands this summer, which was very helpful. Unfortunately, I don't really have a coursebook or anything (just my illegible notes). But if you need links to online tutorials or free (!) EEG software, let me know and I'll post some here.

The picture looks like a frequency (y-axis) vs. time (x-axis) plot (a bit hard to read because of the image quality). The y-axis is probably split into theta, alpha, and beta waves (4-8 Hz; 8-12/14 Hz; above 12/14 Hz, respectively). The distinction between alpha and beta isn't very clear; I think people often use slightly different cutoff points.
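To make the band boundaries concrete, here is a small sketch that finds the strongest frequency in a short single-channel recording and labels it with the ranges above. The naive DFT, the function names, the "delta" label for anything below 4 Hz, and the default 12 Hz alpha/beta cutoff are assumptions for illustration; a real analysis would use an FFT library and windowing:

```python
import cmath
import math


def band_of(freq_hz: float, alpha_beta_cutoff: float = 12.0) -> str:
    """Label a frequency using the ranges quoted above.

    The alpha/beta boundary is a parameter because people use 12 or 14 Hz.
    """
    if freq_hz < 4.0:
        return "delta"
    if freq_hz < 8.0:
        return "theta"
    if freq_hz < alpha_beta_cutoff:
        return "alpha"
    return "beta"  # the post gives no upper limit for beta


def dominant_frequency(samples: list[float], fs: float) -> float:
    """Frequency in Hz of the strongest DFT bin, ignoring the DC bin.

    Naive O(n^2) DFT, fine for a few seconds of data.
    """
    n = len(samples)
    best_k, best_power = 1, -1.0
    for k in range(1, n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        power = abs(acc) ** 2
        if power > best_power:
            best_k, best_power = k, power
    return best_k * fs / n
```

For example, a pure 10 Hz sine sampled at 128 Hz for 2 s gives a dominant frequency of 10 Hz, which `band_of` labels "alpha"; with the 14 Hz convention, 13 Hz would also count as alpha.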

So you want to influence your own EEG pattern somehow. For your plan to work, you'd have to do so very accurately (in different frequency bands). I don't think that's going to work. One way of doing what you intend would be to use mental imagery (like imagining moving your hands) and then map one chord to each hand. Two hands give you two chords you can 'play' with your thoughts. Once you get that to work, you can add other motor imagery, such as tongue or feet. But this is quite difficult, even in a controlled lab setting with high-grade EEG hardware.
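Motor imagery is usually decoded from the mu rhythm (roughly 8-12 Hz) over the sensorimotor cortex, which weakens over the hemisphere opposite the imagined hand. The sketch below reduces that to a comparison of mu power at electrodes C3 and C4. Real systems learn spatial filters and a classifier from calibration data, so treat the fixed band, the `margin` threshold, and the chord mapping as hypothetical assumptions, not a working BCI:

```python
import math


def band_power(samples: list[float], fs: float, lo_hz: float, hi_hz: float) -> float:
    """Average DFT power over the bins whose frequency lies in [lo_hz, hi_hz]."""
    n = len(samples)
    total, count = 0.0, 0
    for k in range(1, n // 2 + 1):
        if lo_hz <= k * fs / n <= hi_hz:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            total += re * re + im * im
            count += 1
    return total / count if count else 0.0


def imagined_hand(c3: list[float], c4: list[float], fs: float, margin: float = 1.5):
    """Guess left vs right hand motor imagery from mu-band (8-12 Hz) power.

    Imagining a hand movement suppresses mu power over the opposite hemisphere:
    C3 (left hemisphere) reflects the right hand, C4 (right hemisphere) the left.
    Returns "left", "right", or None when the difference is below `margin`.
    """
    p3 = band_power(c3, fs, 8.0, 12.0)
    p4 = band_power(c4, fs, 8.0, 12.0)
    if p4 > margin * p3:
        return "right"  # mu suppressed at C3
    if p3 > margin * p4:
        return "left"  # mu suppressed at C4
    return None


CHORD_FOR_HAND = {"left": "C major", "right": "E minor"}  # hypothetical mapping
```

Returning `None` when neither side is clearly weaker gives the guitar a "do nothing" state instead of forcing a chord on every decision.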

An easier option would be SSVEP (https://en.wikipedia.org/wiki/Steady_state_visually_evoked_potential). It measures your brain activity in response to a visual stimulus (this is called a synchronous BCI), as opposed to an asynchronous one (where the system works whenever the user decides to use the BCI). Still, I think SSVEP could work quite well for your purpose. It works like this: you create a simple interface consisting of checkerboard boxes that flash at different frequencies. Say you have one box that says 'C major' and one that says 'E minor'. Because they flash at different frequencies, the BCI software can tell which one you're looking at from the EEG data over your visual cortex. (You can add many more chords, by the way; this is just an example.) Then it's just a matter of linking the BCI software to your robot guitar player: if C, then the robot plays C. One possible problem with this method is that the system might need a few seconds to tell which box you're looking at, which might be too slow (depending on the song, of course).
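The "which box are you looking at" step boils down to comparing EEG power at each box's flicker frequency, usually from occipital electrodes over the visual cortex. A minimal sketch, assuming one already-filtered occipital channel and two hypothetical boxes at 10 Hz and 12 Hz; ready-made SSVEP software does this more robustly (for example with canonical correlation analysis):

```python
import math


def power_at(samples: list[float], fs: float, freq_hz: float) -> float:
    """Signal power at one frequency (correlation with a sine/cosine pair)."""
    re = sum(x * math.cos(2 * math.pi * freq_hz * i / fs) for i, x in enumerate(samples))
    im = sum(x * math.sin(2 * math.pi * freq_hz * i / fs) for i, x in enumerate(samples))
    return re * re + im * im


def attended_box(samples: list[float], fs: float, boxes: dict[float, str]) -> str:
    """Return the chord label of the box whose flicker frequency dominates.

    `boxes` maps flicker frequency (Hz) -> chord label. Power at the second
    harmonic (2f) is added because SSVEP responses often show up there too.
    """
    def score(freq: float) -> float:
        return power_at(samples, fs, freq) + power_at(samples, fs, 2 * freq)

    return boxes[max(boxes, key=score)]
```

Pick flicker frequencies that are not multiples of each other (6 Hz and 12 Hz would collide at 12 Hz). The window length is the trade-off the post mentions: a longer window separates nearby frequencies better and averages out noise, but makes each decision slower.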

At that summer school, we found that this method is much easier than motor imagery or any other asynchronous BCI. There's software out there that is pretty much ready to use for this purpose. One group was able to get something like this working (without the robot guitar player) in one day. All the other groups, which used motor imagery, didn't have enough time or a good enough signal (it was noisy and unreliable). Motor imagery also requires a lot of training, calibration, etc. SSVEP works for everyone, because everyone's visual cortex works pretty much the same way. If you were thinking of using slow cortical potentials, forget about it: way too slow for your purpose.

The only caveat is that you'll need a good signal-to-noise ratio (SNR) and good electrodes too. Are you making your own electrodes like the guy in that link?

EDIT: you might not even need to add delay if you use SSVEP. It might be fast enough if all you want to do is select a chord. It can't be much slower than physically switching between chords (which works because you know when you need to switch, how long it takes to change hand position, etc.). If you know when the next chord is coming up, you can switch to (i.e. look at) the next flashing box you need to play. You'll probably quickly learn how long the program takes to switch. Or maybe make it so that whenever a chord is selected, it is played for at least one/two/four measures (whatever you need for the song). Then during that measure you can select the next chord, and it will be queued for the next measure. Showing the user this info (current chord, next chord if one is selected, time until next chord) would be helpful.
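The queueing idea in the edit can be sketched as a small state machine: the BCI calls `select()` whenever it decodes a chord, and the song clock calls `on_measure_boundary()` at each bar line. `ChordQueue` and its method names are hypothetical; the `status()` tuple corresponds to the "current chord, next chord, time until next chord" display suggested in the post:

```python
class ChordQueue:
    """Hold each chord for at least `min_measures` measures and queue the next selection."""

    def __init__(self, first_chord: str, min_measures: int = 1):
        if min_measures < 1:
            raise ValueError("min_measures must be >= 1")
        self.current = first_chord
        self.queued = None
        self.min_measures = min_measures
        self.measures_left = min_measures

    def select(self, chord: str) -> None:
        """Called whenever the BCI decodes a chord; the latest selection wins.
        Looking back at the current chord cancels any queued change."""
        self.queued = None if chord == self.current else chord

    def on_measure_boundary(self) -> str:
        """Advance one measure and return the chord to play in the new measure."""
        self.measures_left -= 1
        if self.measures_left <= 0 and self.queued is not None:
            self.current, self.queued = self.queued, None
            self.measures_left = self.min_measures
        return self.current

    def status(self):
        """(current chord, queued chord or None, measures until a switch is allowed)."""
        return self.current, self.queued, max(self.measures_left, 0)
```

Because a decoded chord only takes effect at the next allowed bar line, a slow or briefly wrong classification shifts nothing mid-measure; the player just has to make the selection some time before the change.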

-

@Slach Dang, that's something to think about, but the question is whether the hardware I have already made will work with this approach. I also have to find more in-depth information before I make a final call.

-

Look into the P300 as well
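For reference, the P300 is a positive deflection roughly 300 ms after a rare stimulus you are paying attention to, so a P300 chord selector flashes each option repeatedly and averages the EEG segments that follow each flash. A hedged sketch, assuming epochs are already cut out starting at flash onset from one baseline-corrected channel (Pz is a common choice), with a hypothetical 250-500 ms scoring window and function name:

```python
def p300_choice(epochs: dict[str, list[list[float]]], fs: float,
                window_s: tuple[float, float] = (0.25, 0.5)) -> str:
    """Pick the option whose averaged post-flash response is largest in the P300 window.

    `epochs` maps an option label to a list of trials; each trial is a list of
    samples that starts at that option's flash onset.
    """
    start, stop = int(window_s[0] * fs), int(window_s[1] * fs)

    def score(label: str) -> float:
        trials = epochs[label]
        # Averaging across trials keeps the time-locked P300 and shrinks random noise.
        average = [sum(column) / len(trials) for column in zip(*trials)]
        segment = average[start:stop]
        return sum(segment) / len(segment)

    return max(epochs, key=score)
```

More flashes per option give a cleaner average but take longer, which is the same speed versus reliability trade-off as with SSVEP.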

-

As for the project in general, forget triggering particular techniques from EEG - focus on simple melodic lines and chords, and keep the melodic lines within particular scales. If you make mistakes or the EEG is inaccurate (and it will be at times), it's better to hit a wrong note within the scale than to play a random dissonant pinch harmonic (unless, of course, random dissonant pinch harmonics are your thing). Not to mention the physical complexity involved. To be honest, unless you put the guitar in an open tuning, stick to major only or minor only, and barre whole frets, I'm skeptical you will get a robot playing chords cleanly without a massive amount of work, but you could easily enough have a slide controlled by an actuator and a simple strumming mechanism.
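To illustrate the "open tuning, barre whole frets" suggestion: in open G tuning (D-G-D-G-B-D, low to high) the open strings already form a G major chord, so barring fret n gives the major chord n semitones above G. A sketch, assuming open G specifically (the post only says "an open tuning") and hypothetical function names:

```python
OPEN_G_MIDI = [38, 43, 50, 55, 59, 62]  # D2 G2 D3 G3 B3 D4, low to high
PITCH_CLASS = {"C": 0, "C#": 1, "Db": 1, "D": 2, "D#": 3, "Eb": 3, "E": 4, "F": 5,
               "F#": 6, "Gb": 6, "G": 7, "G#": 8, "Ab": 8, "A": 9, "A#": 10,
               "Bb": 10, "B": 11}


def barre_fret_for_major(root: str) -> int:
    """Fret to barre (0-11) so that the open-G strings sound a major chord on `root`."""
    return (PITCH_CLASS[root] - PITCH_CLASS["G"]) % 12


def barre_notes(fret: int) -> list[int]:
    """MIDI notes sounded when every string is stopped at `fret`."""
    return [note + fret for note in OPEN_G_MIDI]
```

So the robot only needs one barre actuator position per chord (A major is fret 2, C major fret 5), which is why this keeps the mechanics simple; minor chords would need a different tuning or extra fretted notes.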

-

Sorry for triple posting, but one more thing comes to mind: try to get this working purely in software with a synth first. BCIs are complex enough, and robotic guitarists are complex enough, without merging the two together. Even if you just have simple control that spits out desired chords and scale degrees at first (remember: keep to one key for simplicity), that'd be a cool thing you can build on.
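For the "chords and scale degrees in one key" stage, a synth test rig only needs to turn a scale degree into MIDI note numbers. A minimal sketch, assuming a major key and MIDI note 60 (middle C) as the default tonic; the function names are hypothetical:

```python
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitones above the tonic for degrees 1-7


def degree_to_midi(degree: int, tonic_midi: int = 60) -> int:
    """MIDI note of a 1-based major-scale degree; degrees above 7 wrap into higher octaves."""
    octave, step = divmod(degree - 1, 7)
    return tonic_midi + 12 * octave + MAJOR_STEPS[step]


def diatonic_triad(degree: int, tonic_midi: int = 60) -> list[int]:
    """Triad built by stacking thirds inside the key: degrees d, d+2, d+4."""
    return [degree_to_midi(degree + k, tonic_midi) for k in (0, 2, 4)]
```

In C major, degree 1 gives C-E-G, degree 2 gives D-F-A (D minor), and degree 5 gives G-B-D, so every decoded number lands on a chord that belongs to the key.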

-

Reiterating @garethnelsonuk's posts, I think you should work on getting the BCI to produce melodies and notes before you incorporate a robotic guitar. I'm sure EEG -> MIDI could have a wide range of musical applications, and I am curious to see what you come up with! I remember reading an article about a producer who made "robot jazz" and, I think, modulated the music using accelerometers and (maybe?) EEGs. I'll try to find and link his website.

-

I agree with what you have said; I almost built the guitar first but opted against it. How far off do you think it could fall out of tune? What would be the single point of failure if the EEG's accuracy fails? Could an average between two sets of electrodes on the frontal lobe keep it in check? I was also reading about the risk of electric shock if it is built with a defect. Could I post photos of the EEG, just in case someone finds a little problem that may or may not be there? I also have a friend who will look over it before I wire myself to it.

-

Edit: I see, and I agree. I may build a prototype of the guitar that keeps it as narrow as possible. I feel like a synth could be a start to straying from the path...

-

While MIDI can't go "out of tune", I think what @garethnelsonuk was getting at was to "quantize" the MIDI notes to a certain musical scale (similar to the "Scale" MIDI effect in Ableton Live, if you're familiar). The "guitar" part of your project should just be something that can receive MIDI input, which has been done before. By sticking with MIDI, you can focus on decoding EEG input while still having flexible, musical output. I don't think using a synthesizer would be straying from the path; all you would really need is a software synthesizer to test that your device/program is outputting something.
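Quantizing to a scale, as described here, means snapping any decoded pitch to the nearest note that belongs to the key. A minimal sketch in plain Python, assuming a major scale (C major by default) and building the raw three-byte MIDI Note On message by hand (status byte 0x90 plus the channel number); the function names are hypothetical, and in practice a MIDI library or a DAW feature like Ableton's Scale effect would handle this:

```python
MAJOR_SCALE = frozenset({0, 2, 4, 5, 7, 9, 11})  # semitones above the tonic


def quantize_to_scale(note: int, tonic_pc: int = 0, scale: frozenset = MAJOR_SCALE) -> int:
    """Snap a MIDI note (0-127) to the nearest in-scale note; ties resolve downward."""
    for offset in range(12):
        for candidate in (note - offset, note + offset):
            if 0 <= candidate <= 127 and (candidate - tonic_pc) % 12 in scale:
                return candidate
    raise ValueError("scale contains no usable notes")


def note_on(note: int, velocity: int = 100, channel: int = 0) -> bytes:
    """Raw MIDI Note On message: status 0x90 | channel, then note and velocity (7-bit)."""
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])
```

With the default C major, a decoded C# (61) becomes C (60) and F# (66) becomes F (65); passing `tonic_pc=7` switches to G major, where F# is kept as-is.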

-

What about using EEG to automate effects for live performances? Sort of like the glove Imogen Heap has been developing.
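Effect automation would map a slowly varying EEG feature onto a MIDI Control Change message, which MIDI-capable effects can usually be assigned to. A sketch, assuming some feature such as relative alpha power has already been computed; the feature, its range, the smoothing factor, controller number 1, and the function names are all hypothetical choices:

```python
def to_cc_value(x: float, lo: float, hi: float) -> int:
    """Map x linearly from [lo, hi] onto the 0-127 MIDI controller range, clamped."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    fraction = min(max((x - lo) / (hi - lo), 0.0), 1.0)
    return round(fraction * 127)


def smooth(previous: float, new: float, alpha: float = 0.1) -> float:
    """Exponential smoothing so the effect parameter does not jitter with every EEG frame."""
    return previous + alpha * (new - previous)


def control_change(controller: int, value: int, channel: int = 0) -> bytes:
    """Raw MIDI Control Change message: status 0xB0 | channel, controller, value."""
    return bytes([0xB0 | (channel & 0x0F), controller & 0x7F, value & 0x7F])
```

Clamping matters here because EEG features occasionally spike far outside their usual range (blinks, jaw clenches), and an unclamped value would wrap or jump the effect to an extreme setting.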

-

@_mz_o__

I was under the impression he was talking about interference with the EEG sensors. Still, that's nice to know about MIDI. I may run several cables; I don't like the idea of placing all the potential strain on one cable. There are a lot of ways this could go wrong, so I'd better start making a list... I am still pondering whether there would be any real advantage to invasive needle EEG electrodes. I can't find a good write-up on them that isn't over my head.

Sincerely,

John Doe

@MTS

That has crossed my mind. I was thinking about putting as many effects on board as possible; any that are on the floor will always be on, keeping to the personality of the guitar, and everything else will be routed through electro-mechanical switching. I feel I should say that by "on board" I do not mean things like distortion or fuzz, but rather something like the Rainbow Machine from EarthQuaker Devices: the kind of stuff too strange for words, yet it makes me smile a little. Yeah, I am a little off the wall when it comes to effect pedals.

Sincerely,

John Doe

-

I was talking about both - EEG is always going to have some lack of precision, and for that reason you won't ever be able to target a precise note, so it's best to make sure that the note is ALWAYS within a particular scale.

-

One thing I'd like to suggest: get hold of an OCZ NIA and play a game with it. You'll see it takes a certain amount of practice (meditation helps a lot) to get decent control.

-

Okay, I am waiting on parts for my ModularEEG, but that will be one of the programs I look at...

-

It's hardware, not software:

-

Is that NIA working off of EEG signals, or EMG signals? They aren't really clear.

-

To be honest, it's kinda both - it's EEG with lots of EMG interference. When gaming, you can fire by raising your eyebrows and move by relaxing mentally.

-

That's interesting, but I don't have the money right now; I am between jobs at the moment and I have a welding project to hit hard... @garethnelsonuk Also, did you ever look at the bio-input method I posted on your implantable Edison project thread?

-

I looked but didn't quite follow - can you post more on that thread to explain it?
